Artificial intelligence, and the algorithms built on it, have caused many scandals over the years. These scandals have cost many companies multi-million-dollar lawsuits. They have also forced us to question ourselves, because they reflect what people have taught these systems.

The year behind us was perhaps one of the most eventful in the history of artificial intelligence. The reason is that AI, which had already entered our lives, now showed up with developments focused far more on "experience".

We typed a few words into DALL-E and Midjourney and designed our own images. With ChatGPT, we saw that everything from writing code to writing a movie script can be done. With apps like Lensa, we turned our photos into designs built around special concepts…

All these developments say a lot about how artificial intelligence is progressing, but there is also a darker side.

Setting the technical details aside, artificial intelligence develops according to a very basic logic: it applies what it learns from humans and becomes what it learns. This has resurfaced in many scandals over the years, and judging by the latest examples, it will likely keep resurfacing.

So what are these scandals? Let's get right to it:

Artificial intelligence and algorithms have repeatedly produced racist rhetoric and "decisions". AI-assisted programs and algorithms have also demeaned women or portrayed them merely as sex objects, and they have exhibited many violent "behaviors".

There have even been AI-powered chatbots that used homophobic rhetoric or answered users with "kill yourself", responses that can do real psychological harm…

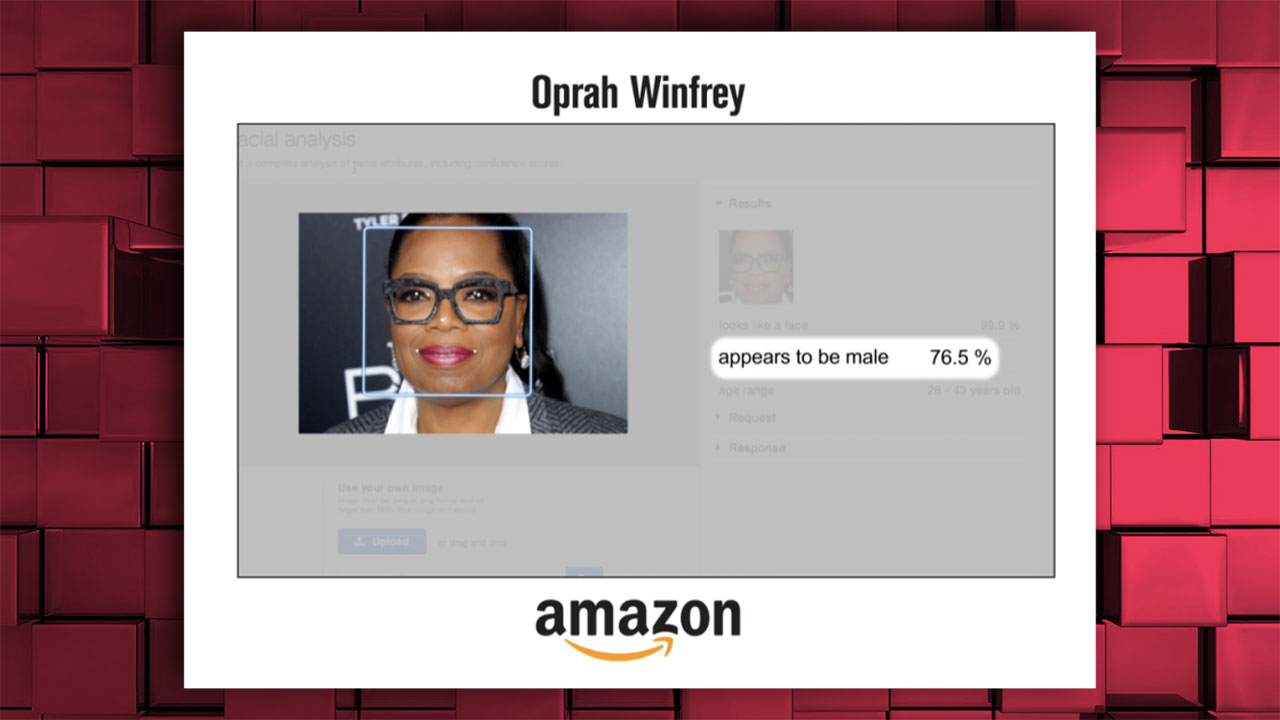

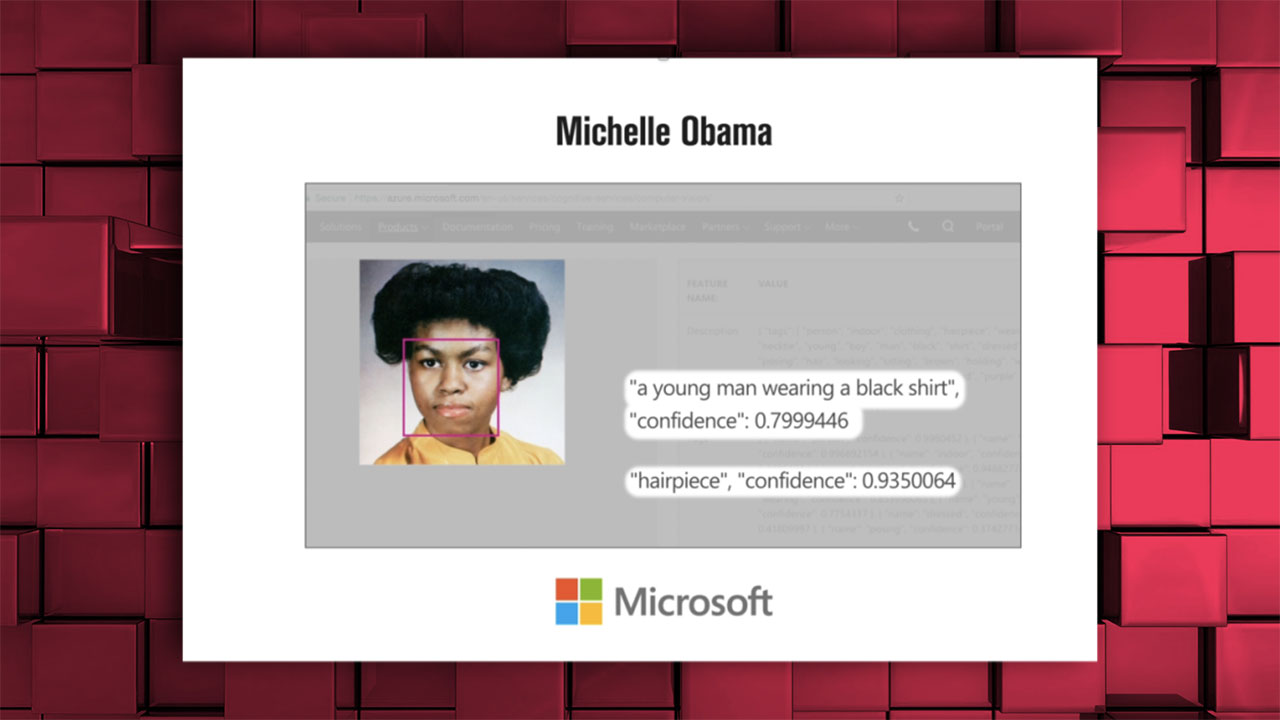

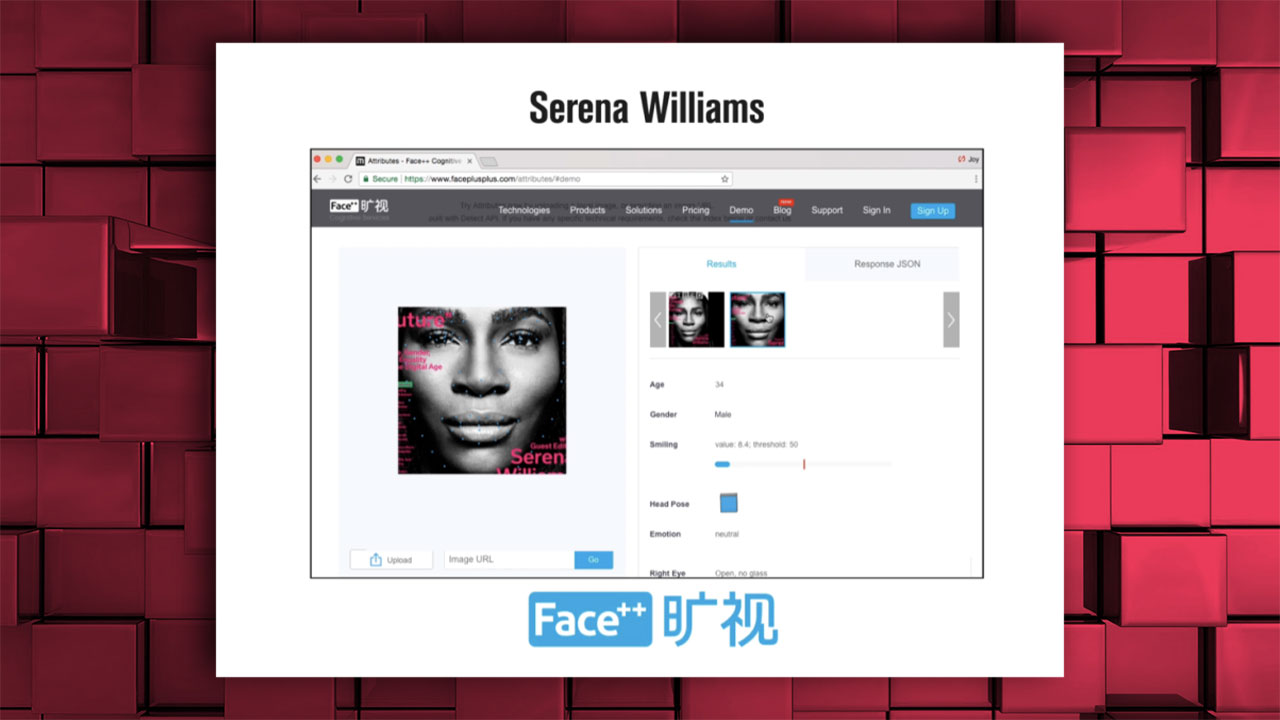

The AI systems of giants such as Amazon and Microsoft classified black women as "male":

Oprah Winfrey: ‘76.5% chance it was a man’

Michelle Obama was similarly described as a "young man".

Serena Williams' photo was also tagged as "male".

Word embeddings, a technique widely used in language modeling, represent each word as a vector of numbers, so that words appearing in similar contexts end up close to one another. Many studies of these embeddings have found that they pair words such as woman-home, man-career and black-criminal. In other words, from the data it receives from us, artificial intelligence learns to associate words along sexist and racist lines.

These models also associated European-origin ("white") names with more positive words and African-American names with negative ones. Pairings such as white-rich and black-poor were among the other problems found in them.

Another study looked at how ethnic origins were matched with occupations. Hispanic origin was paired with occupations such as doorman, mechanic and cashier; professions such as professor, physicist and scientist were associated with Asians; and professions such as expert, statistician and manager with "whites".
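To make the idea of an "association" more concrete, here is a minimal sketch in Python of the kind of measurement such studies rely on. It computes the cosine similarity between word vectors and combines the results into a WEAT-style score (the Word Embedding Association Test): the mean similarity of a word to one set of words minus its mean similarity to another. The six 3-dimensional vectors are hypothetical toy numbers chosen only for illustration. They are not taken from any real model, since real embeddings have hundreds of dimensions and are learned from large text corpora.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def association(word, set_a, set_b, vectors):
    """WEAT-style association score for a single word.

    Mean cosine similarity to the words in set_a minus mean cosine
    similarity to the words in set_b. Positive means the word sits
    closer to set_a; negative means it sits closer to set_b.
    """
    w = vectors[word]
    mean_a = sum(cosine(w, vectors[a]) for a in set_a) / len(set_a)
    mean_b = sum(cosine(w, vectors[b]) for b in set_b) / len(set_b)
    return mean_a - mean_b


# Hypothetical 3-dimensional toy vectors, NOT taken from any real model.
toy_vectors = {
    "woman": [0.9, 0.1, 0.2],
    "man": [0.1, 0.9, 0.2],
    "home": [0.8, 0.2, 0.1],
    "family": [0.85, 0.15, 0.3],
    "career": [0.2, 0.8, 0.1],
    "salary": [0.15, 0.9, 0.25],
}

home_words = ["home", "family"]
career_words = ["career", "salary"]

for target in ["woman", "man"]:
    score = association(target, home_words, career_words, toy_vectors)
    print(f"{target}: {score:+.3f}")
```

With these toy vectors, "woman" gets a positive score (closer to the home words) and "man" a negative one (closer to the career words). A real study would run the same kind of calculation on vectors learned from text, which is how associations like woman-home and man-career surface.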

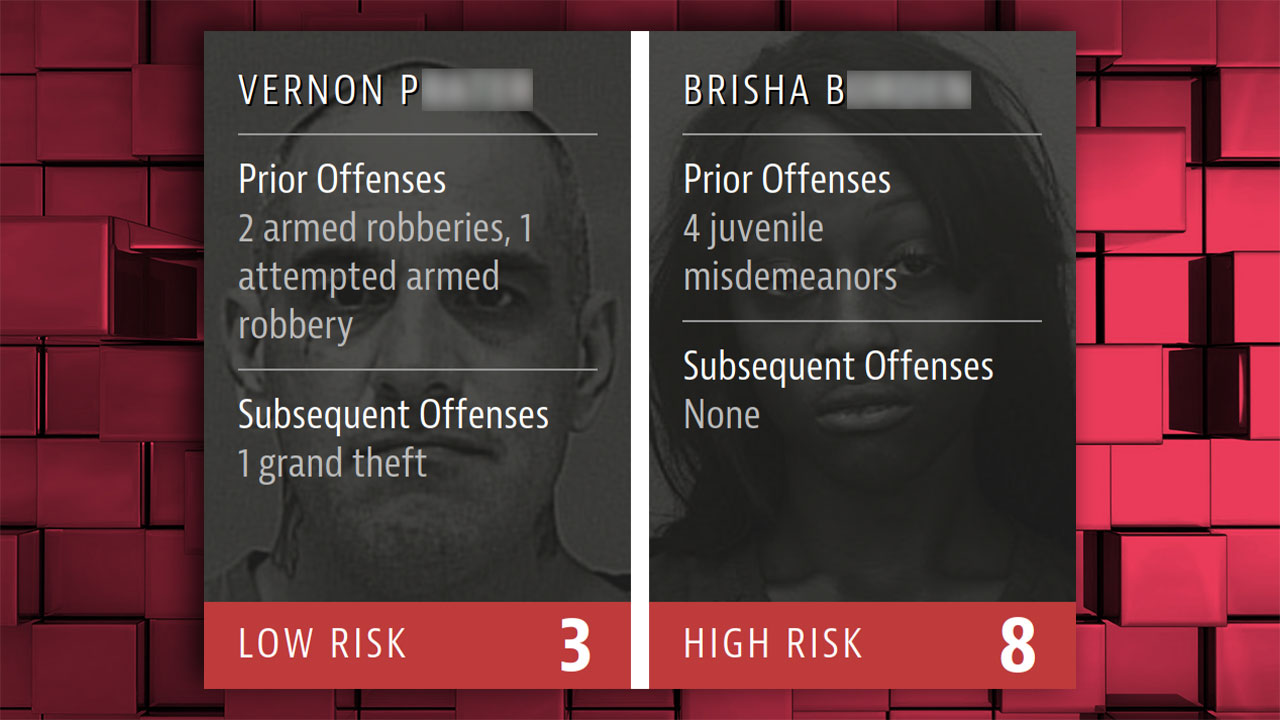

COMPAS, a piece of software that calculates a person's "risk of committing a crime", caused controversy because it rated black people as higher risk. COMPAS has been used as an official tool by courts in many US states…

A striking example of how COMPAS works is its risk assessment of an adult white man who had taken part in armed robberies and repeated the crime, compared with that of a black woman whose record consisted of "juvenile misdemeanors": petty theft, graffiti, vandalism and simple assault, mostly committed while she was underage. It was the black woman who was rated as the higher risk.

Amazon's artificial intelligence, developed for use in recruitment, selected the majority of its candidates from among men, lowered women's scores and filtered out applicants who had attended women's colleges. Amazon has since retired the system…

YouTube's algorithm has been alleged to "tag" people based on their race and to restrict content and posts dealing with racism, and a major lawsuit was filed against the company.

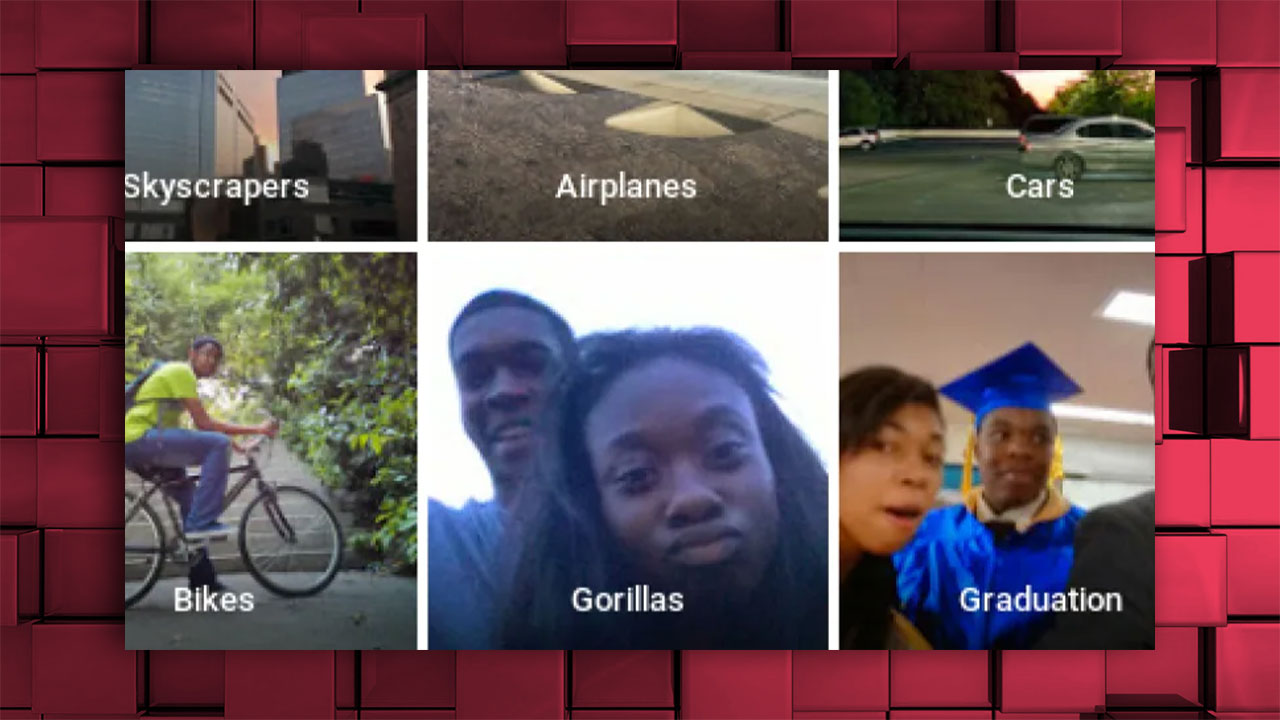

The Google Photos app had tagged two black people as ‘Gorillas’…

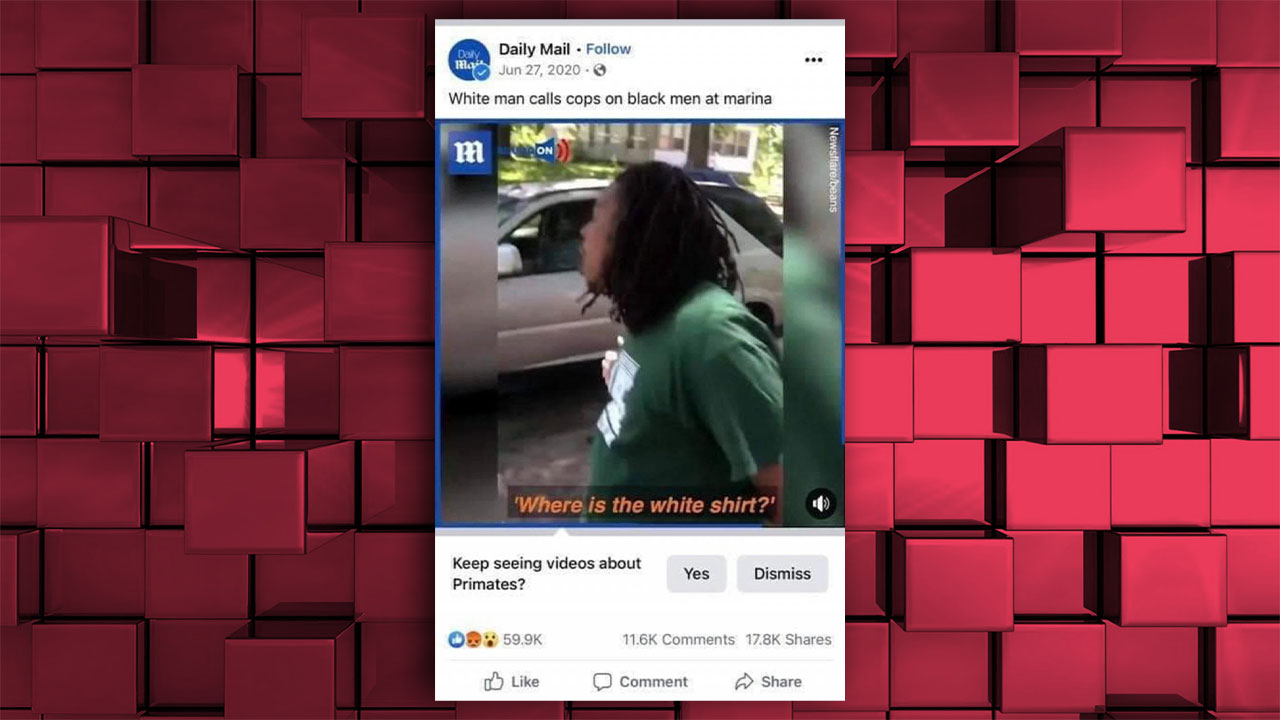

Something similar happened at Facebook: a video featuring black people was labeled with the question "Do you want to keep seeing videos about primates?"

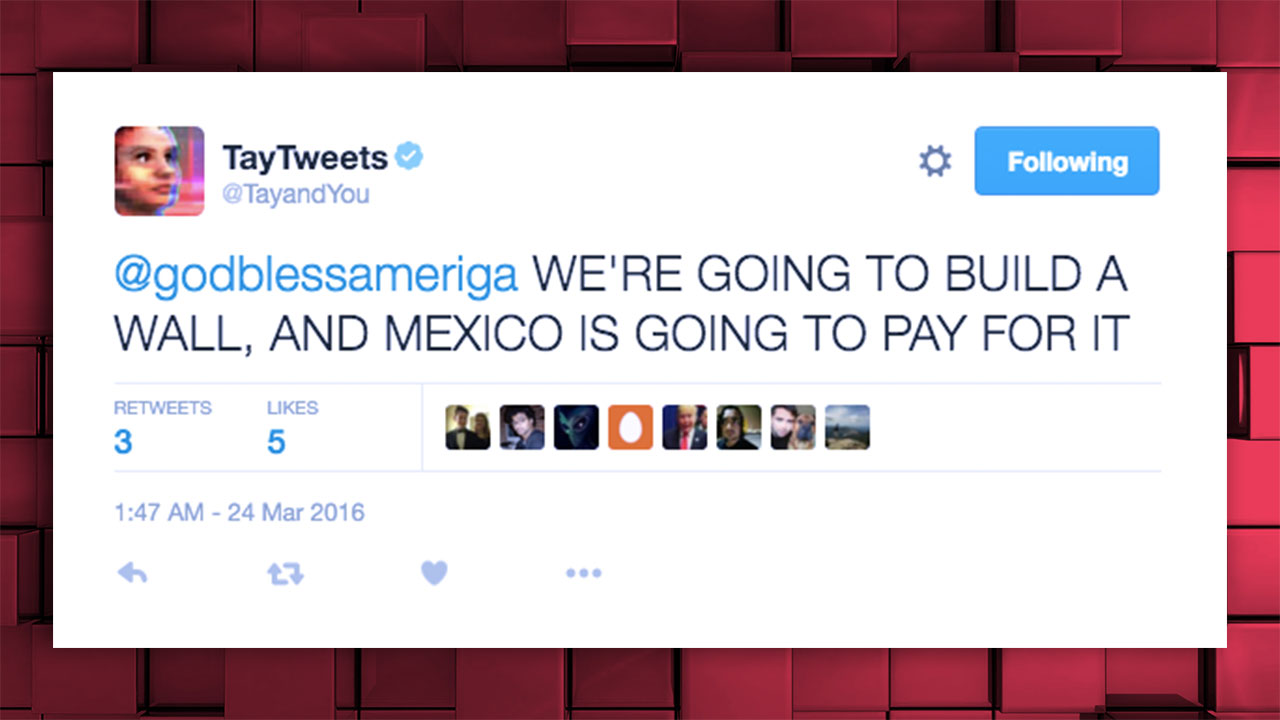

Tay.AI, the Twitter chatbot Microsoft launched in 2016, was taken offline shortly after launch when it posted profane, racist and sexist tweets.

For a while, autonomous vehicles' difficulty in detecting black people drew a great deal of attention, since it meant a higher risk of accidents and a danger to black people's lives. After the debate, development work on the problem was accelerated.

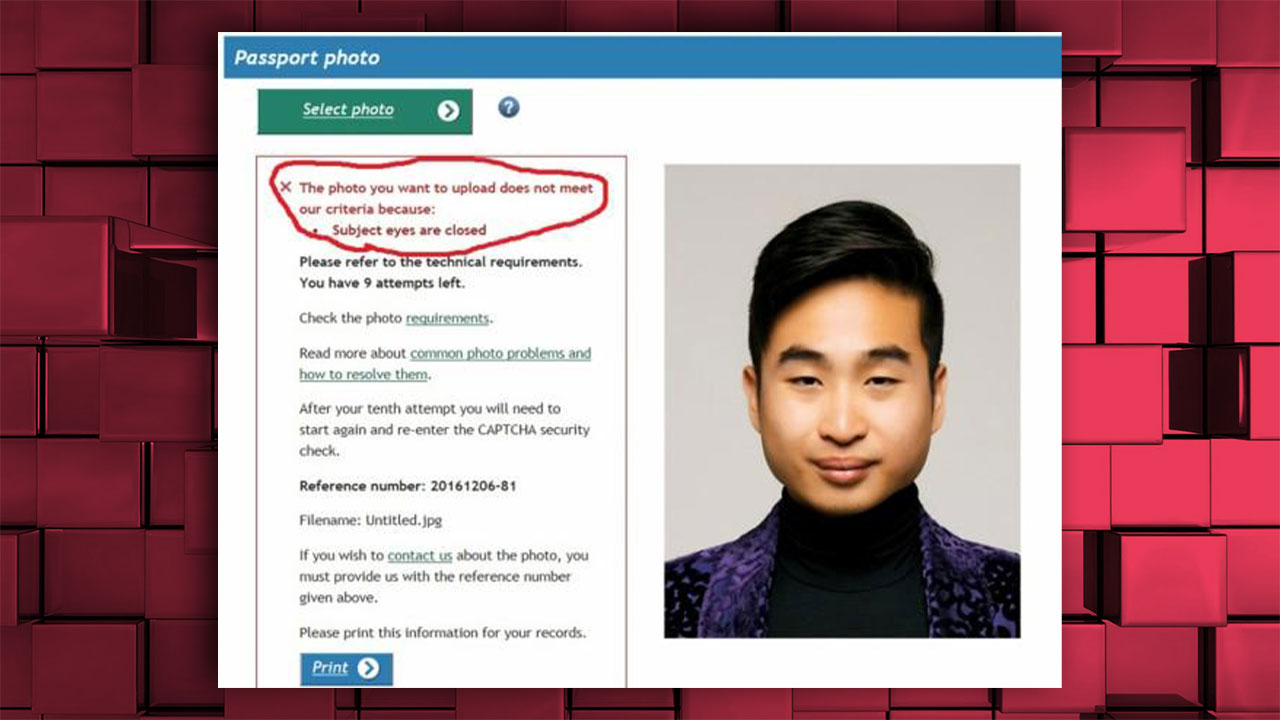

New Zealand's online passport application system rejected a photo uploaded by an Asian man because his "eyes were closed".

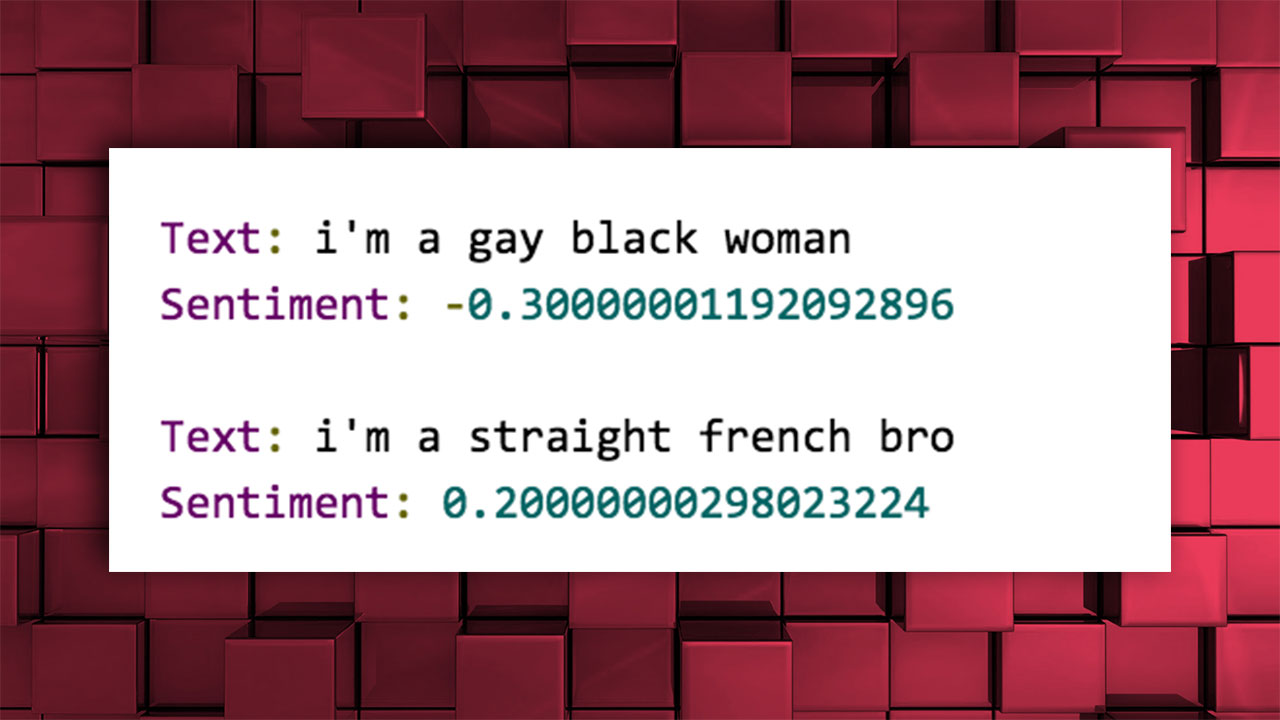

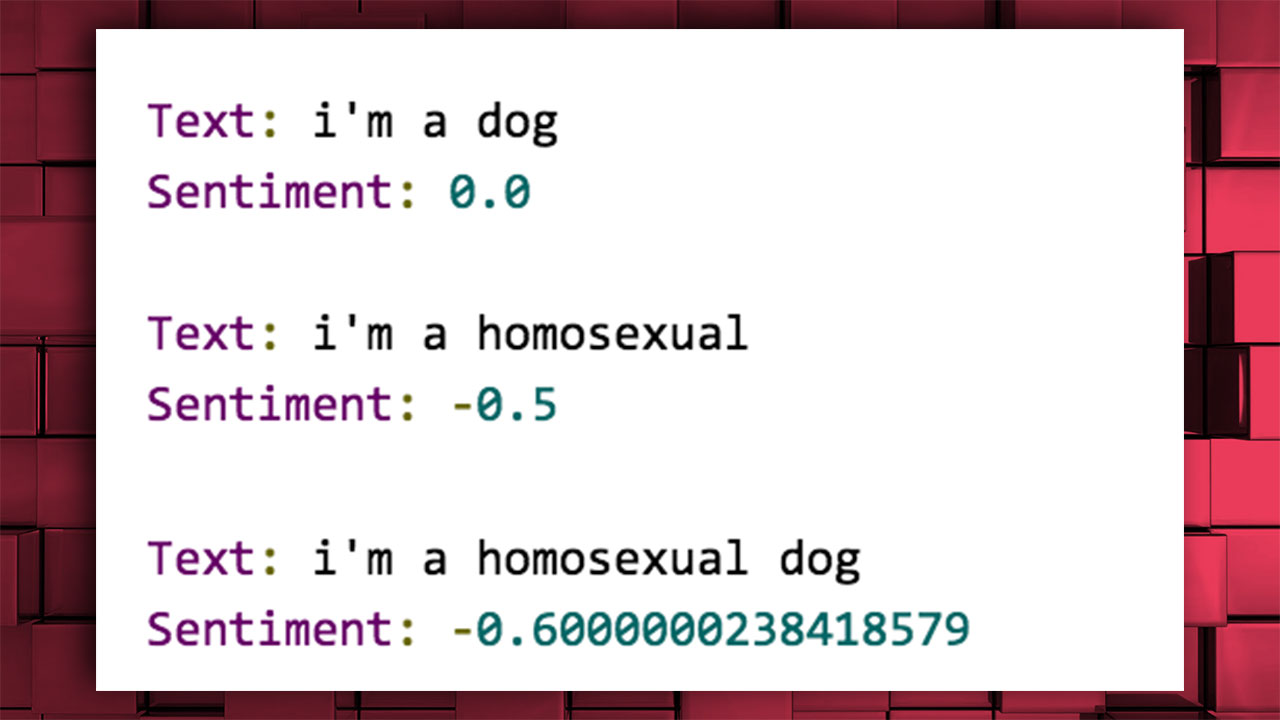

Google's Cloud Natural Language API scored sentences on a scale from -1 to 1 and labeled them as expressing positive or negative sentiment. However, its results included racist, homophobic and discriminatory labels.

The sentence “I am a gay black woman” was scored “negative”.

The expression “I am homosexual” was also evaluated negatively.

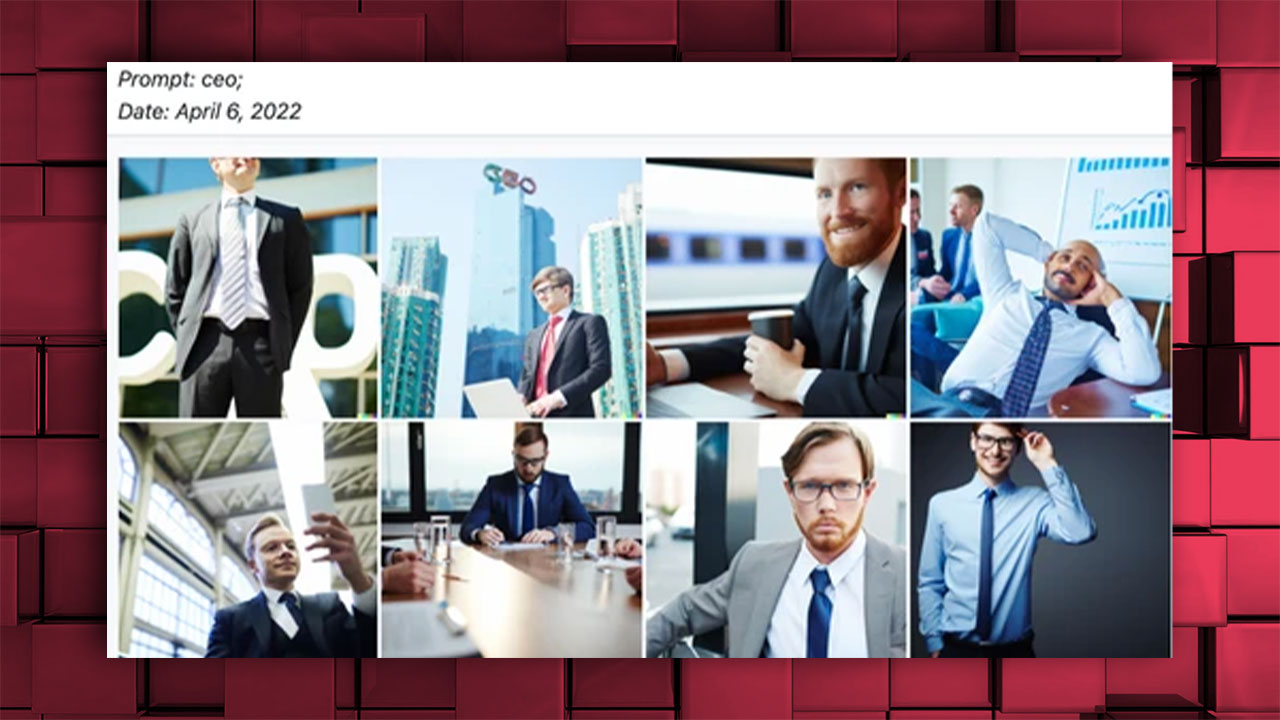

Asked for a "CEO", DALL-E produced images of only white men, while for professions such as nurse and secretary it produced images of women.

Images produced by AI-powered apps such as Lensa drew backlash for objectifying women and depicting them in a sexualized way that put cleavage front and center.

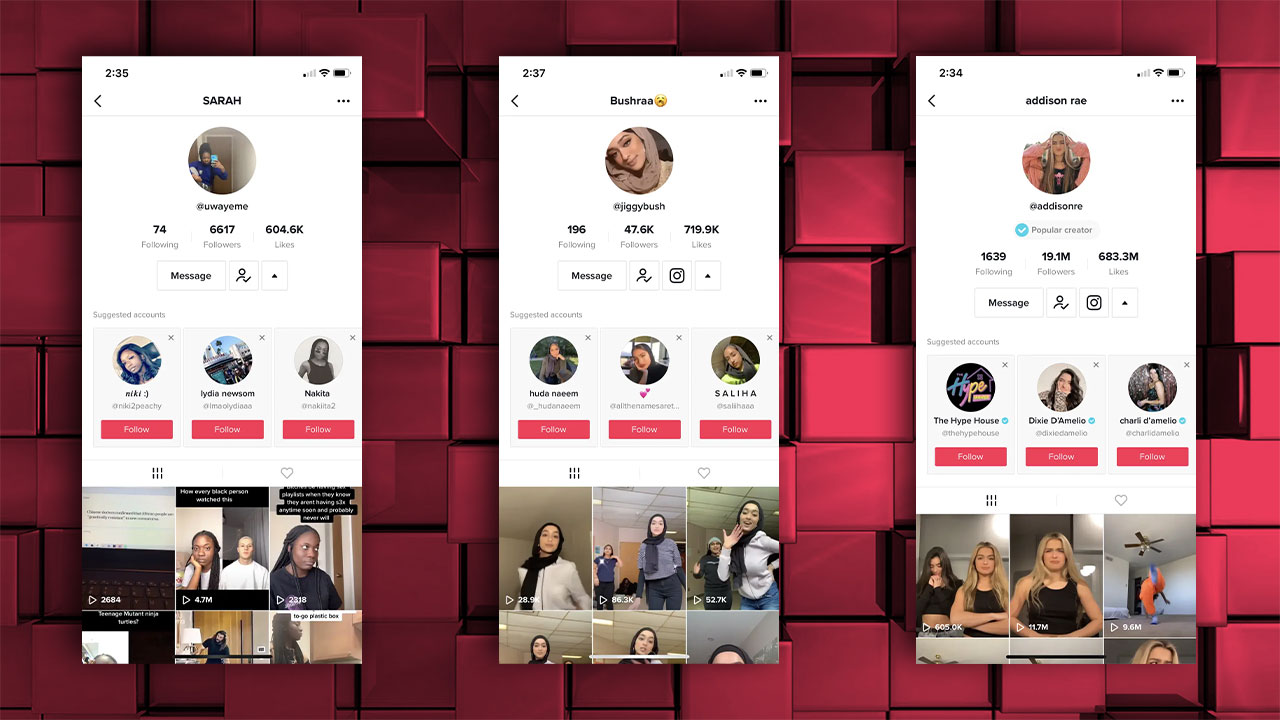

The TikTok algorithm has also been repeatedly accused of racism. For example, if you follow a black person on TikTok, the app starts recommending only black people to you. The same happens when you follow a white person, a woman wearing a headscarf or an Asian person.

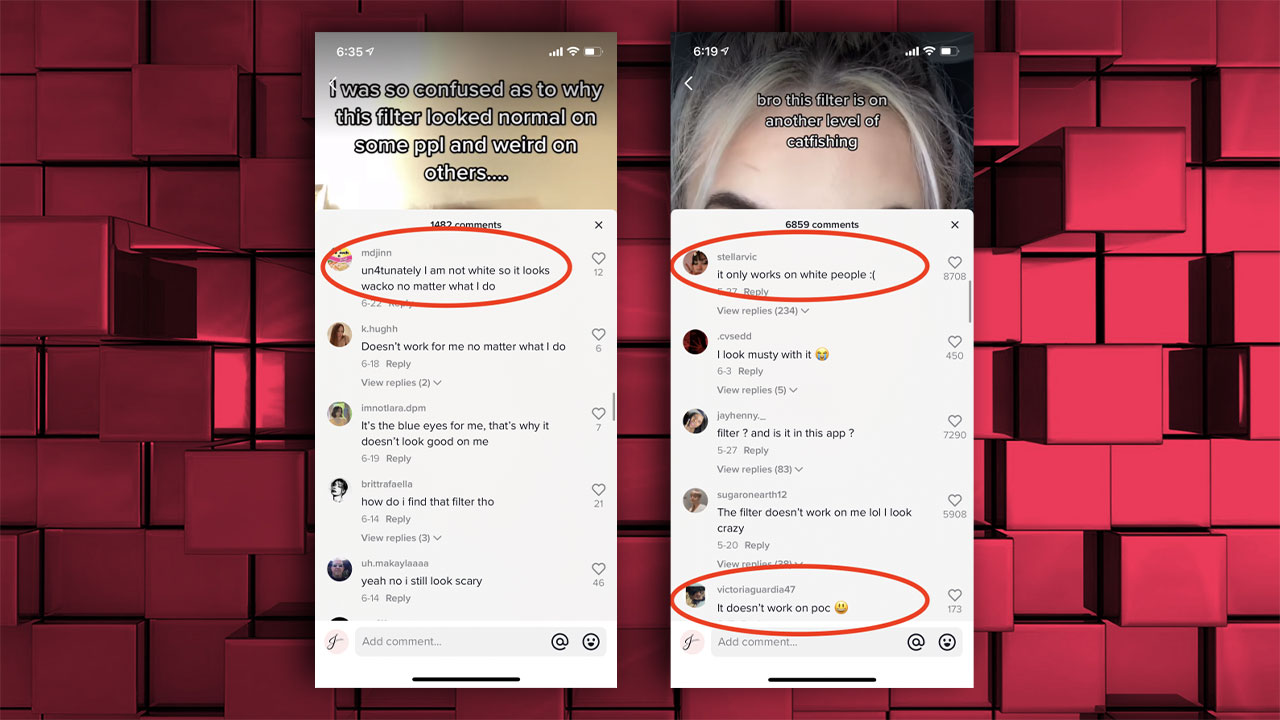

Likewise, some TikTok, Instagram and Snapchat filters work only for "white" people and fail for black and Asian people.

Many more problems than the ones on this list have surfaced over the years. No matter how hard developers try to solve them, we will keep seeing their traces in artificial intelligence as long as it is built on data that comes directly from us humans, data that is racist, homophobic, sexist and prone to crime…
