The pace at which artificial intelligence is advancing, and the seemingly limitless range of what it can do, amaze and excite us every day. However, that same limitlessness can also serve terrible ends such as pedophilia. In this article we tell you about the artificial intelligence-based “ClothOff” pedophilia network.

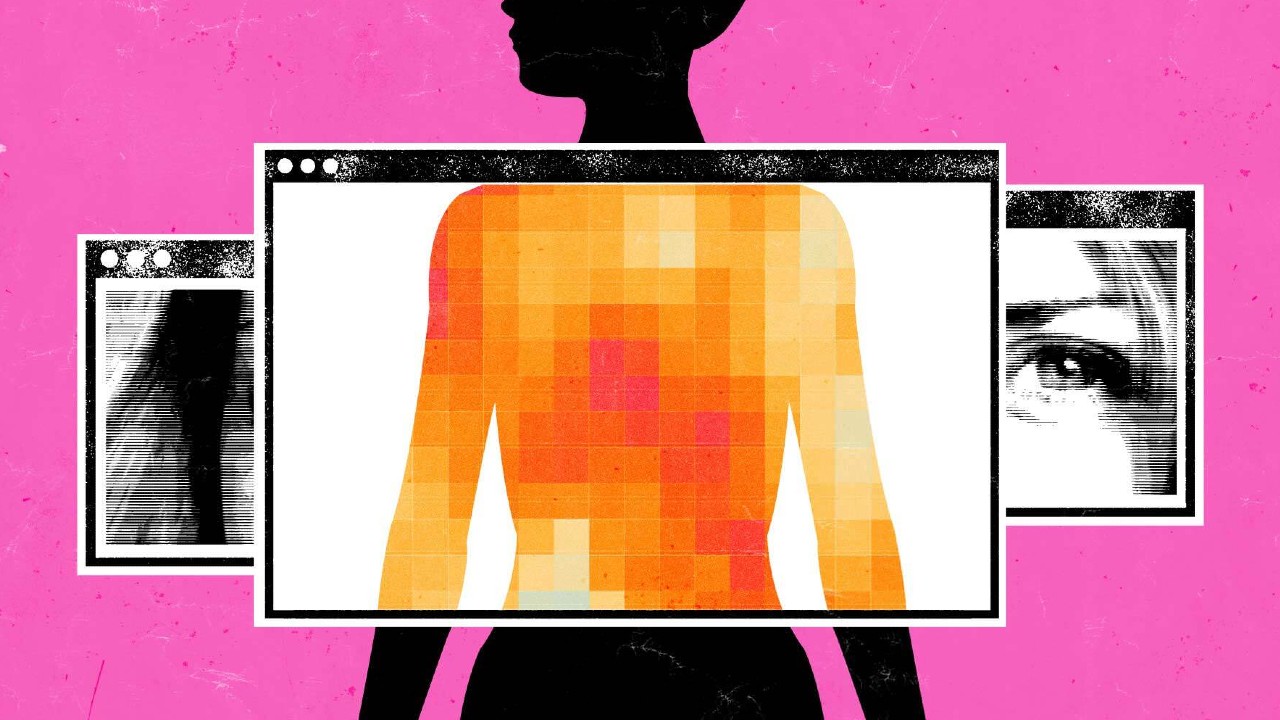

The application called “ClothOff”, which uses artificial intelligence to generate a nude image of any person you choose, has led to many underage girls being subjected to blackmail and psychological violence. Nude images of the girls, which were not real but were indistinguishable from real ones, were passed from hand to hand.

Before delving into the details of the case, which continues to be investigated under the leadership of The Guardian, let’s look at what exactly a “deepfake” is and what it can do.

What is a deepfake?

A deepfake is a fake or manipulated audio recording, video or photograph, created mostly with artificial intelligence techniques. For example, a video of someone can be manipulated very realistically to make it seem as if they are saying something quite different from what they actually said.

Likewise, a photograph of you can be used to portray you doing or saying something you never did. All of this can be done flawlessly using the data from just a few photos or videos.

Taylor Swift isn’t the only victim, either.

As you may remember, deepfake images of Taylor Swift made headlines last month. Unfortunately, this does not happen to just a few people. In cities, towns and communities we never hear about, images of many people can be used in this way.

So Taylor Swift was just the tip of the iceberg. The horrific case at the center of this article took place in the small town of Almendralejo, Spain.

The app called “ClothOff” started a horrific pedophilia network.

According to the news published by The Guardian on March 1, 2024, dozens of nude photos of female students in the town, created by artificial intelligence, were circulated in a WhatsApp group set up by other children at school.

Girls who suffered serious anxiety and depression because of the deepfake photos even stopped going to school. They were having panic attacks and were being blackmailed and bullied.

The mother of one of the girls said: “You are shocked when you see it. The image looks completely real… If I didn’t know my daughter’s body, I would definitely think that image was real.”

The same situation was happening in New Jersey, USA.

Similar events took place at a high school in New Jersey, thousands of miles away from Almendralejo. High school girls fell victim to deepfake images created by other students in their class. At the center of the events in both Spain and New Jersey was the same app, “ClothOff”.

To enter ClothOff, which is visited more than 4 million times a month, you must confirm that you are over 18 years of age. Paying 8.50 euros was enough to get 25 images. When you uploaded a photo of someone, the app would return a nude version of that person.

Those behind the terrible pedophilia network are still being investigated.

The people behind ClothOff managed to stay anonymous, but a six-month investigation by The Guardian revealed the names of several people. Clues led to a brother and sister in Belarus. The company was laundering money by generating revenue through a shell company.

One of the people contacted admitted that he was the founder of ClothOff, but said that images of people under the age of 18 could not be used. There is a complex network operating through businesses registered in Europe and shell companies based in London.

Access to the application has been blocked in many countries, but it can still be used from all over the world through various methods. How the images were created and who exactly was behind them are still being investigated.
