Meta has paved the way for artificial intelligence models that can tell you what you forgot and where.

Meta, the technology giant that has recently placed extra emphasis on artificial intelligence research, is equipping its social network applications with new features while also thinking about how to leave its rivals behind in the artificial intelligence race. To that end, it is looking for a way to produce its own chips while also announcing brand new services.

The newest of these is OpenEQA. The name stands for Open-Vocabulary Embodied Question Answering, and the technology is said to let artificial intelligence understand the spaces around it. The open-source technology aims to give artificial intelligence models senses, allowing them to collect clues from their environment.

Can’t find your office card? Ask your assistant where it is.

- You left your card on the dinner table, next to the food bowl.

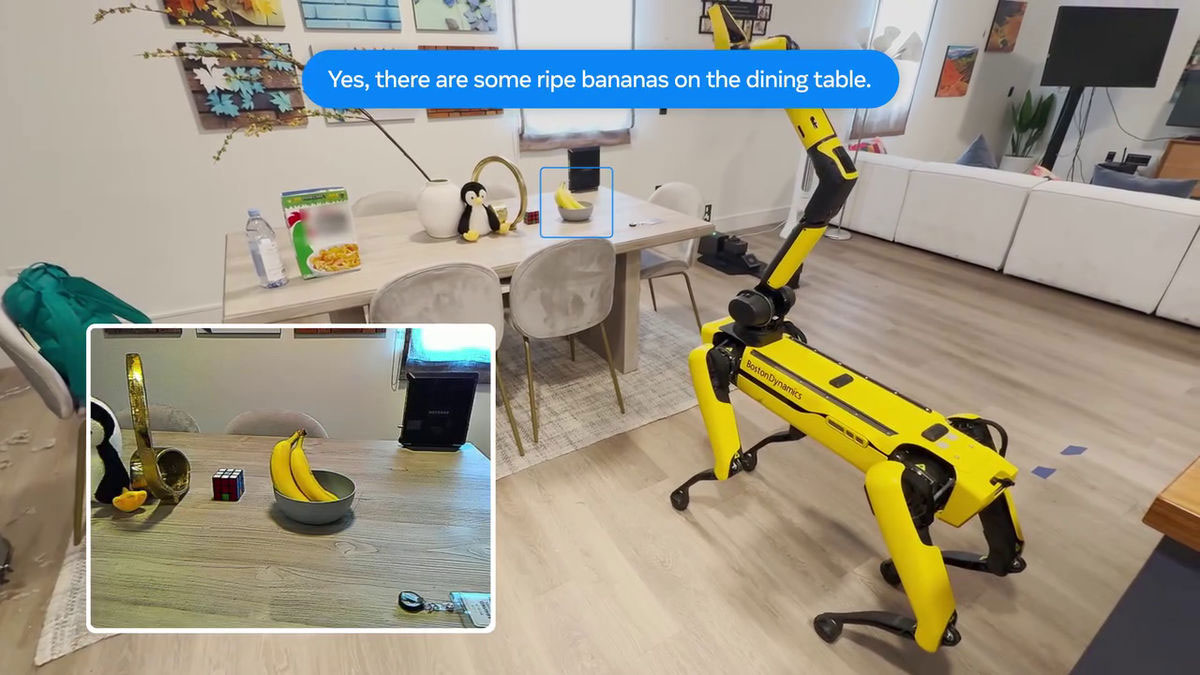

Rather than being a stand-alone product, OpenEQA is meant to serve as the brain of an existing device. The system will come to life in a home robot or smart glasses, allowing these devices to understand their environment. It will not only understand the environment but will also be able to convey information about it to the user when necessary.

The examples provided by Meta demonstrate the usefulness of the system. Suppose you are about to leave the house but can’t find your office card. You will be able to ask your smart glasses where you left it. Drawing on the visual memory provided by OpenEQA, the glasses will be able to tell you that the card is, for example, on the table in the living room.

- “Yes, there are some bananas on the dinner table.”

Or, on your way home from work, you will be able to ask the robot in your home whether there is any food. The robot, which constantly moves around the house, will be able to tell you whether there is food or not based on what it has seen.

Meta points out that today’s VLMs (vision-language models) do not add much to standard language models because of their limitations. That is why the company announced that it has made OpenEQA open source: a system that sees its surroundings as humans do, remembers what is where, and passes this information on to people when necessary will require the work of many experts to become a reality. What are your thoughts?

Source:

https://www.zdnet.com/article/meta-releases-openeqa-to-test-how-ai-understands-the-world-for-home-robots-and-smart-glasses/#ftag=RSSbaffb68