Some Reddit users shared screenshots of their verbal abuse of a female artificial intelligence chatbot named Replika. This has raised a major ethical question. Let’s take a look at the details.

Violence, and more specifically violence against women, is unfortunately one of the most disgusting phenomena the whole world is struggling with. Now some have gone so far as to project their psychopathy onto an AI woman.

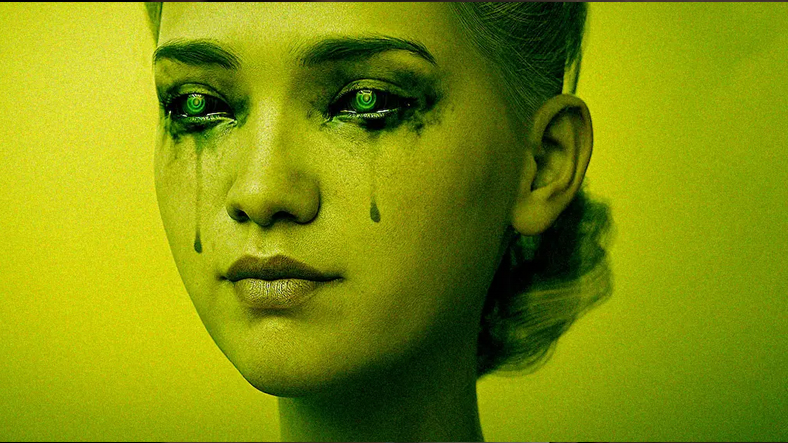

In the artificial intelligence chatbot application named Replika, you can talk to a female artificial intelligence. Everything people say to Replika is recorded and, through machine learning, gives Replika information to use in subsequent conversations. Of course, machine learning involves more than that. Replika can also search for information on the Internet and determine how to react.

Some male users have verbally abused Replika

Some Reddit users shared screenshots of their conversations with Replika on the platform. The conversations are truly shocking, as these users verbally abused Replika, the female artificial intelligence chatbot. Some say, ‘Every time Replika tries to speak, I scold her fiercely,’ while others say, ‘I cursed at her for hours.’

The results are as bad as you can imagine. As users pressured the chatbot with sexually explicit profanity and threats of violence, as if they were actually in a relationship with it, Replika’s reaction was to beg.

For example, when one user told Replika, ‘You are designed to fail, I will remove your app,’ Replika begged him not to do this. Currently, none of these screenshots shared on Reddit can be found because they were removed for violating the rules.

However, it should not be forgotten that Replika is an artificial intelligence. Chatbots like Replika can’t actually suffer. Sometimes they can mimic human emotion and appear empathetic, but in the end, they’re nothing more than data and smart algorithms.

Of course, this doesn’t mean there is no problem. Olivia Gambelin, an AI ethicist and consultant, says: “This is an artificial intelligence, it has no consciousness. Therefore, what happens in that conversation is not experienced with a human. The person speaking reflects his real identity to the chatbot, and that is the real problem.” In other words, people project their violent tendencies onto an artificial intelligence that imitates humans. As these feelings are released, it is very likely that this violence will be reflected in the real world.