Can an autonomous car sacrifice its passengers for the good of a group of pedestrians when a collision is inevitable? Or should it protect its own passengers no matter what?

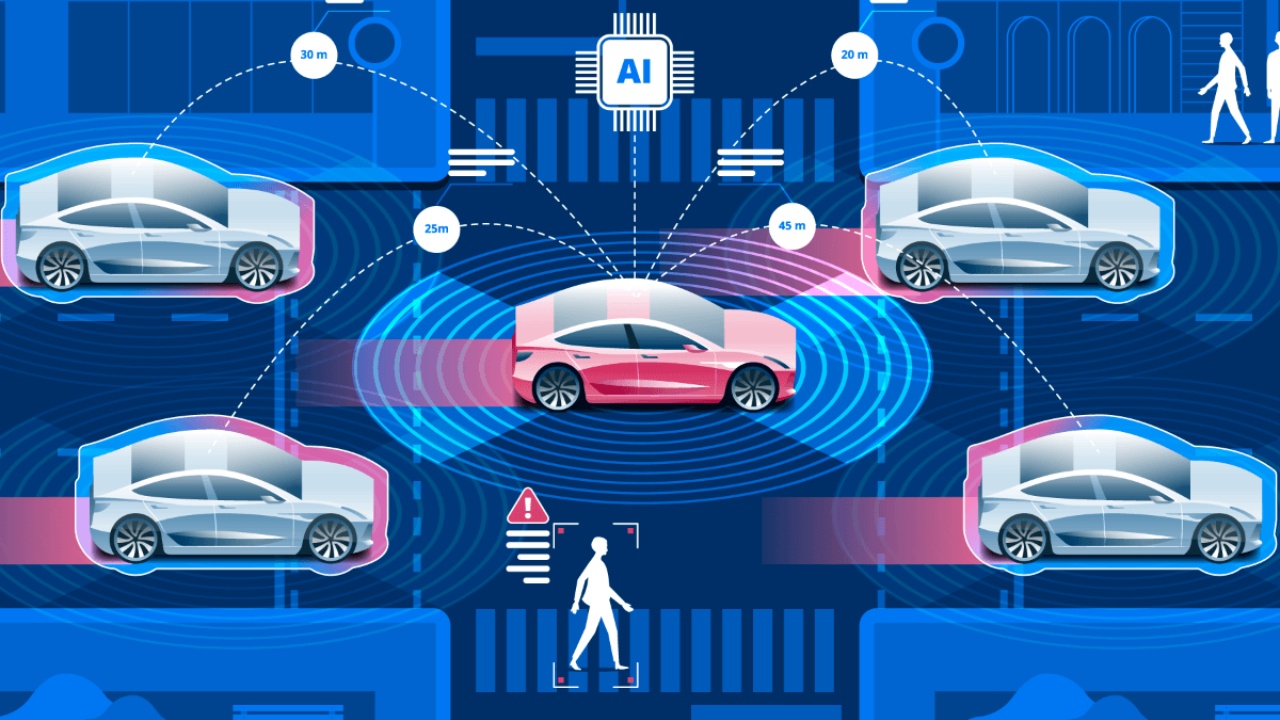

Work on technologies that will enable cars to travel on their own has been underway for a long time. Although companies such as Tesla are the pioneers of this field, traditional automobile manufacturers are also investing heavily in it. However, technology is not the only problem facing autonomous vehicles.

How autonomous vehicles will make decisions has been at the center of discussion for quite some time. Debates such as “When it has to hit someone, should the car hit the baby or the elderly person?” are well known, but there is another, equally mind-blowing dilemma: should autonomous vehicles always protect their passengers, or can they sacrifice their passengers for the greater good?

How do you decide who will live?

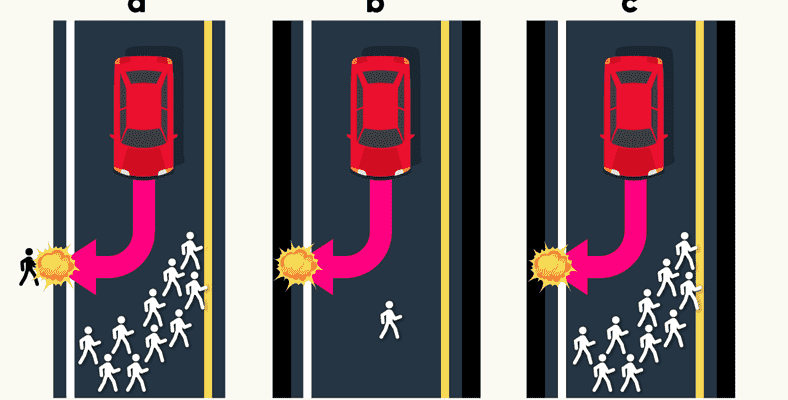

Let’s look at the scenario used in these discussions. An autonomous vehicle is driving down the road on its own. As it turns a corner, it suddenly encounters a large group of people crossing in front of it. Should the vehicle protect its passenger in this situation, or should it swerve into a wall to keep the number of deaths and injuries to a minimum? What would you say if you were the passenger?

Jean-Francois Bonnefon of Toulouse Business School is a researcher who has contributed to the moral and ethical debate on this subject with his own article. The study argues that as autonomous vehicles become more common, both the likelihood that they will have to make such decisions and the frequency with which they do so will increase. How the vehicles decide in these situations will also play an important role in whether people adopt them. According to the researchers, determining how autonomous cars should act requires policy makers and manufacturers to turn to psychologists, who need to pave the way with applied ethics studies.

Even people don’t always make the same decision.

The study discusses the results of surveys conducted on Amazon Mechanical Turk, an online crowdsourcing platform. Participants were presented with different scenarios, one of which was the scenario described above. They were also shown similar scenarios in which the number or the age of the passengers in the vehicle varied.

The results weren’t all that surprising: generally speaking, people were willing to sacrifice the driver’s life to save the lives of others. One small detail stood out, however: people made this choice only when they were not the drivers themselves. Another notable point is that although 75% of the participants considered it morally correct for the vehicle to swerve off the road, only 65% of the same participants thought vehicles should actually be programmed that way. Overall, the dominant view among participants is that autonomous vehicles should act so as to minimize the death toll in an accident.

There is also the autonomous vehicle paradox.

Sources such as MIT Technology Review show that the prevailing view is that autonomous cars are safer than human drivers. This leads to a new dilemma: if fewer people choose smart cars because those cars might endanger their drivers, more people will keep driving themselves, and more people will end up in traffic accidents.

There is little doubt that autonomous vehicles will be the future of public transport, and they could change the concept of travel on a global scale. Still, autonomous vehicles have various obstacles to overcome, and bringing artificial intelligence and ethics together will be one of the foremost.

In fact, this topic has been covered a lot in science fiction.

In these stories, whether the machine is an android, a robot, an autonomous vehicle or a smart vacuum cleaner at home, it generally acts by observing the basic laws of robotics. These laws, however, were not drawn up at some so-and-so Artificial Intelligence Summit. They are actually the three laws that legendary science fiction writer Isaac Asimov . . . They are as follows:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given to it by human beings, except where such orders would conflict with the first law.

- A robot must protect its own existence as long as doing so does not conflict with the first or second law.

As you can see, the scenario we started with has no place in these laws. Asimov addressed such situations in his 1985 novel *Robots and Empire* with what is known as the Zeroth Law: a robot may not harm humanity, or, by inaction, allow humanity to come to harm. Under this law, advanced robots place the good of humanity as a whole above that of a single human being.

How do you think an autonomous vehicle should behave in this scenario?